Neural Kriging¶

Neural Kriging is an interpolation method that estimates values at unknown locations based on surrounding data points. It is inspired by traditional geostatistical methods like inverse distance weighting (IDW) and kriging. It incorporates a trainable neural network to enhance predictions.

A key feature of Neural Kriging is that users do not need to define interpolation parameters. The model automatically learns the distance weighting, rotation, and influence of secondary variables from the dataset, which reduces bias and can improve accuracy. This makes it an adaptable tool for geologists who want to model spatial data without extensive parameter tuning, particularly in the geosciences, where geological and geochemical patterns are often nonlinear and influenced by multiple factors.

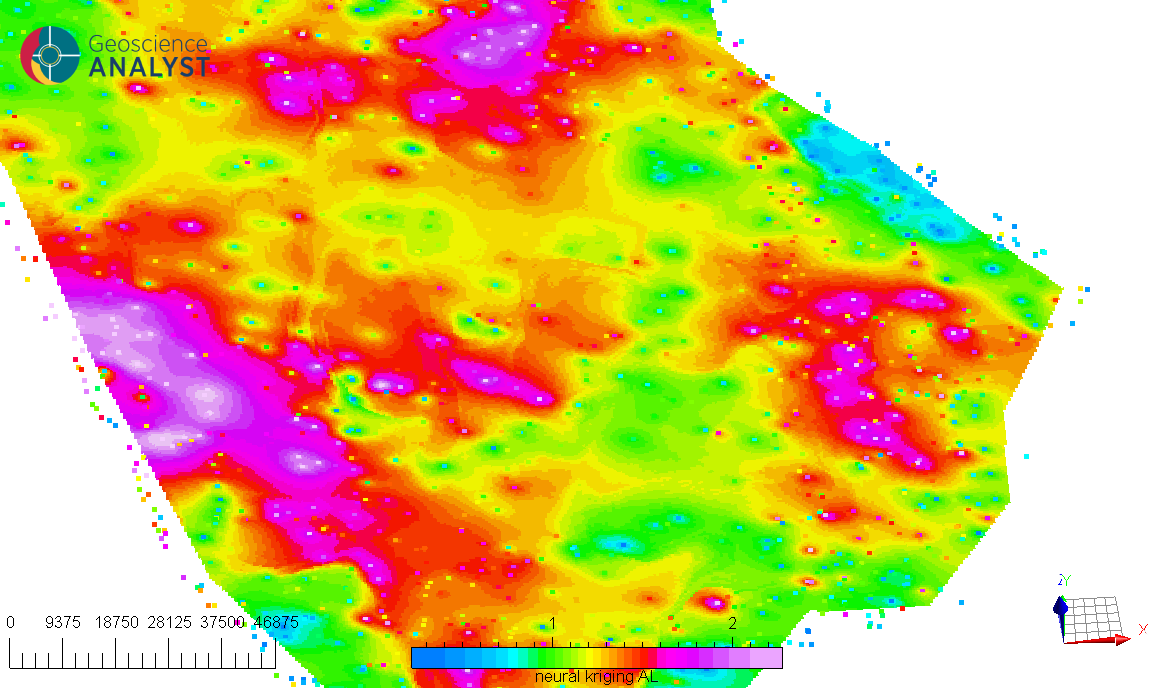

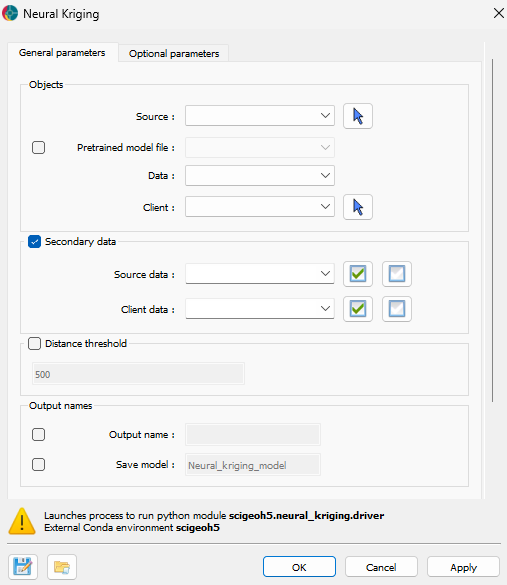

Interface¶

The application requires only the data to interpolate and the object where the interpolation will be saved. The interface is shown in Fig. 2.

Fig. 2 Neural Kriging Interpolation main interface¶

Object selection¶

Source: The object containing the data to use for the interpolation.

Pretrained model file (optional): If users have a pretrained model, they can load it here. It must be associated with the source object, and the data the model was trained on must be present in the source object.

Data: The primary data to interpolate.

Destination: The object on which the data will be interpolated.

Secondary data (optional)¶

If activated, users can include secondary data in the interpolation. The secondary data must have the same name in both the source and client objects. The following options are available:

Source: The secondary data from the source object.

Client: The secondary data from the client object.

Distance threshold (optional)¶

Distance threshold: If activated, only points within the specified distance of the source points will be interpolated.

Output names¶

Interpolated: The name of the output variable.

Save model (optional): If activated, users must define the name of the model to save in the source object.

Advanced parameters¶

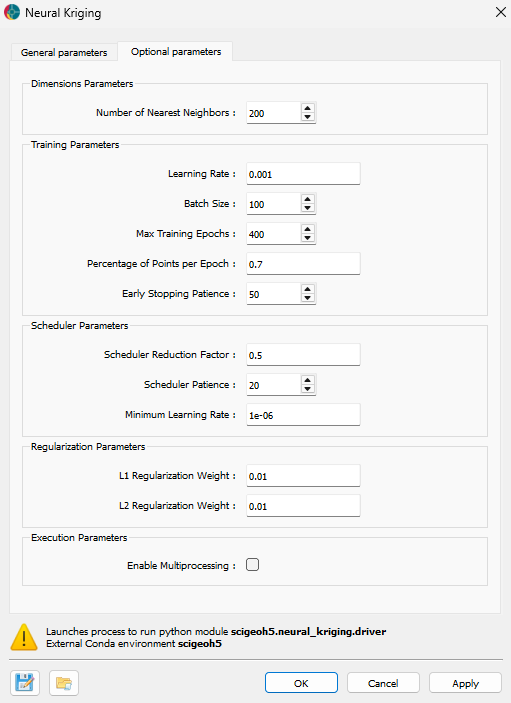

The application provides several advanced parameters to customize the interpolation process. Fig. 3 shows the available options. The default values should work well for most cases.

Fig. 3 Neural Kriging Interpolation advanced parameters interface¶

Dimensions parameters¶

Output probabilities: Outputs probabilities alongside the interpolated values for referenced data.

Number of nearest neighbors: Specifies the number of closest data points used for interpolation. A higher number of neighbors smooths predictions but increases computation time, while a lower number preserves local variations but may introduce noise.
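As a rough illustration of what this parameter controls, the sketch below selects the k closest source points to a target using a brute-force search. The function name `nearest_neighbors` is hypothetical, and the application may use a faster spatial index; only the selection logic is shown.

```python
import math


def nearest_neighbors(target, points, k):
    """Return the indices of the k points closest to target (brute force)."""
    # Sort point indices by Euclidean distance to the target location.
    order = sorted(range(len(points)), key=lambda i: math.dist(target, points[i]))
    return order[:k]


# Hypothetical source points in (X, Y, Z).
source_points = [(5.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
closest = nearest_neighbors((0.0, 0.0, 0.0), source_points, k=2)
```

With a larger k, more distant points contribute to each estimate, which is why predictions become smoother and computation time grows.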

Training parameters¶

Learning rate: Controls how quickly the model updates during training. A higher learning rate speeds up convergence but risks instability, whereas a lower learning rate ensures more gradual, stable learning at the cost of longer training times. If the interpolation converges to a suboptimal solution, users can try reducing the learning rate.

Batch Size: Defines the number of data points processed at once during training. Larger batch sizes improve computational efficiency but may generalize poorly, while smaller batch sizes capture finer details but require more iterations.

Max training epochs: The maximum number of times the model processes the dataset. More epochs allow for better learning but increase runtime. Early stopping mechanisms prevent unnecessary computations when the model stops improving.

Percentage of points per epoch: Specifies the fraction of available data used in each training epoch. A higher percentage stabilizes training but increases computation time, while a lower percentage introduces more randomness, potentially enhancing generalization.

Early stopping patience: Defines how many epochs the model will continue training without improvement before stopping. A higher patience prevents premature termination but may lead to overfitting.
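The interaction between Max training epochs and Early stopping patience can be sketched as follows. This is a minimal illustration under stated assumptions, not the application's training loop: the list of losses stands in for the error measured after each epoch, its length plays the role of the maximum number of epochs, and `stopping_epoch` is a hypothetical name.

```python
def stopping_epoch(losses, patience):
    """Return (last epoch run, best loss seen) under early stopping."""
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(losses):
        if loss < best_loss:
            # Improvement: remember it and reset the patience counter.
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_loss
    # The maximum number of epochs was reached without triggering a stop.
    return len(losses) - 1, best_loss


# Hypothetical per-epoch losses: a plateau followed by a late improvement.
losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.73, 0.6]
short_patience = stopping_epoch(losses, patience=3)
long_patience = stopping_epoch(losses, patience=5)
```

In this example the shorter patience stops during the plateau and misses the late improvement, while the longer patience runs through all epochs and finds it.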

Scheduler parameters¶

Scheduler reduction factor: Determines the step size for reducing the learning rate when training stagnates. A lower factor results in smaller adjustments, while a higher factor decreases the learning rate more aggressively.

Scheduler patience: Specifies the number of epochs without improvement before reducing the learning rate. A higher patience delays learning rate adjustments, while a lower patience makes adjustments more frequent.

Minimum learning rate: Defines the lowest value the learning rate can reach during training, ensuring that training does not completely stop even after multiple reductions.

Regularization parameters¶

L1 regularization weight: Encourages sparsity in the model by penalizing large weights. A higher L1 weight reduces complexity but may remove useful patterns, while a lower weight allows for more flexibility.

L2 regularization weight: Prevents large weight values, improving generalization. A higher L2 weight enhances stability but may underfit data, while a lower weight provides more adaptability.
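One common way to apply such penalties, assumed here for illustration, is to add them to the training loss. The sketch below shows the arithmetic only. Which model parameters the application penalizes is not stated, so `parameters` is a hypothetical flat list and `regularized_loss` is a hypothetical name.

```python
def regularized_loss(data_loss, parameters, l1_weight, l2_weight):
    """Add L1 and L2 penalties on the parameters to a data-fit loss."""
    l1_penalty = sum(abs(p) for p in parameters)  # sum of absolute values
    l2_penalty = sum(p * p for p in parameters)   # sum of squares
    return data_loss + l1_weight * l1_penalty + l2_weight * l2_penalty


# Hypothetical values: a data loss of 1.0 and two model parameters.
total = regularized_loss(1.0, [0.5, -2.0], l1_weight=0.1, l2_weight=0.01)
```

Setting both weights to zero recovers the unpenalized loss. Raising either weight makes large parameter values more costly, which is the source of the underfitting risk described above.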

Execution parameters¶

Enable multiprocessing: Allows parallel processing to speed up training. Enabling this feature reduces computation time but requires more system resources. Multiprocessing runs on half of the available CPU cores.

Methodology¶

As shown in Fig. 4, this application aims to find the optimal weights of surrounding points to predict the value at a target location. This section explains how those weights are calculated.

Fig. 4 Interpolating a point by weighting surrounding points.¶

Neural Kriging follows a step-by-step process to calculate interpolated values:

Compute the distance to neighboring points in 3D space (\(X, Y, Z\)).

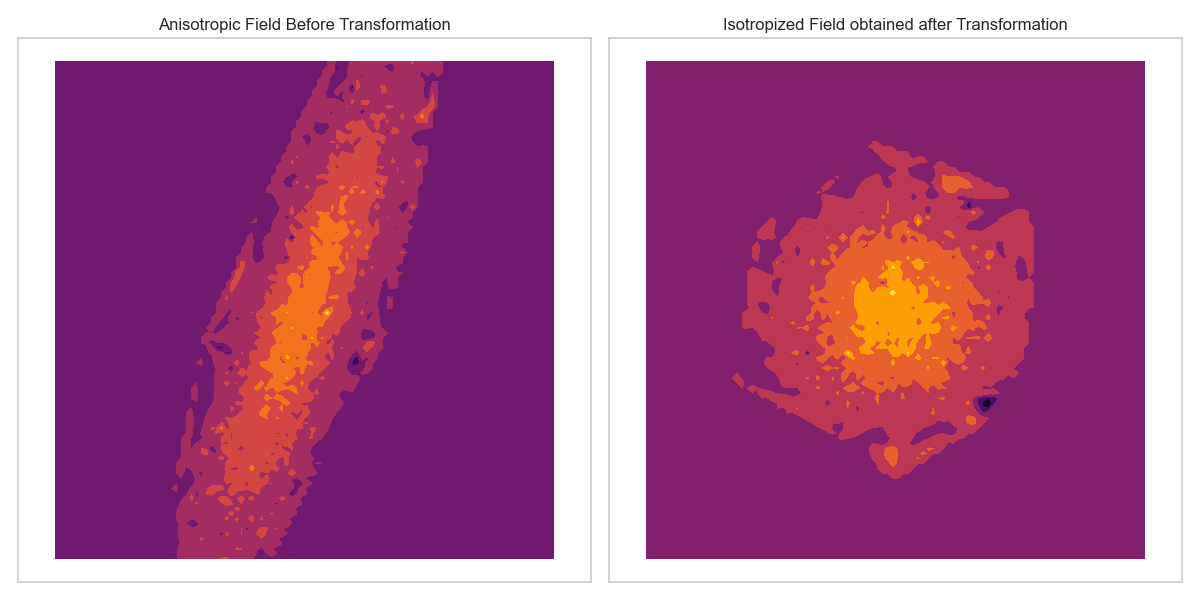

\[\delta_{\text{distance}} = (X_{\text{point}} - X_{\text{neighbor}}, Y_{\text{point}} - Y_{\text{neighbor}}, Z_{\text{point}} - Z_{\text{neighbor}})\]

Apply a trainable transformation matrix to adjust for anisotropy.

\[\delta_{\text{rotated}} = \mathbf{R} \cdot \delta_{\text{distance}}\]

This transformation matrix (\(\mathbf{R}\)) enables the model to learn the optimal linear transformation of the space, such as anisotropic scaling, rotation, and shearing, as illustrated in Fig. 5. This process allows subsequent steps to be performed in an isotropic space.

Fig. 5 Deformation of space to account for anisotropy.¶

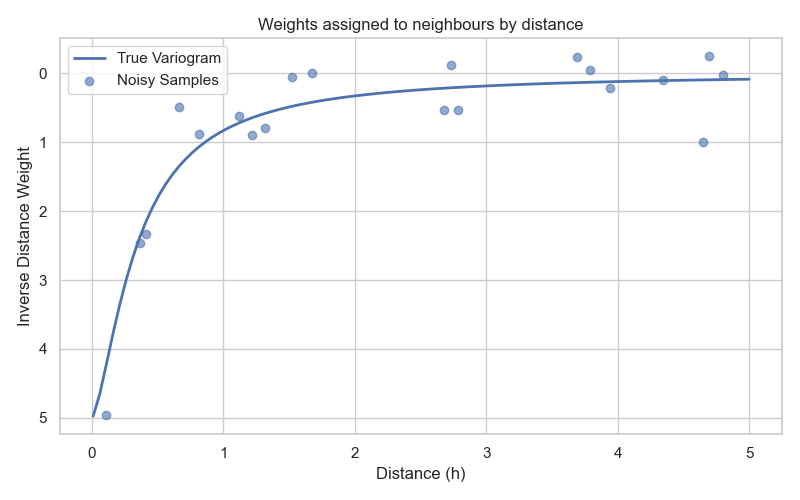
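The first two steps can be sketched in plain Python. In the application \(\mathbf{R}\) is trainable; here it is fixed by hand as a rotation about the Z axis followed by a per-axis scaling, purely for illustration. All function names are hypothetical.

```python
import math


def delta(point, neighbor):
    """Component-wise offset between a target point and a neighbor."""
    return tuple(p - n for p, n in zip(point, neighbor))


def make_transform(azimuth_deg, scale_x, scale_y, scale_z):
    """Build an example 3x3 matrix: rotate about Z, then scale each axis."""
    angle = math.radians(azimuth_deg)
    c, s = math.cos(angle), math.sin(angle)
    rotation = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    scales = (scale_x, scale_y, scale_z)
    # Scaling after rotation multiplies row i of the rotation by scale i.
    return [[scales[i] * rotation[i][j] for j in range(3)] for i in range(3)]


def apply_transform(matrix, vector):
    """Matrix-vector product R . delta."""
    return tuple(sum(matrix[i][j] * vector[j] for j in range(3)) for i in range(3))


def norm(vector):
    return math.sqrt(sum(v * v for v in vector))


# Halving the X axis makes a neighbor 2 units away along X count as 1 unit away.
R = make_transform(0.0, 0.5, 1.0, 1.0)
d_rotated = apply_transform(R, delta((2.0, 0.0, 0.0), (0.0, 0.0, 0.0)))
effective_distance = norm(d_rotated)
```

After this step, distances along the direction of greatest continuity are shortened, so the later weighting can treat all directions alike.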

Compute interpolation weights using the transformed distances and trainable range and nugget parameters for each X, Y, Z axis:

\[\text{weights} = \frac{1}{\textit{nugget} + \|\delta_{\text{rotated}}\|^{\textit{range}}}\]

This function assigns weights based on distance, similar to IDW. It also borrows concepts from kriging: a range parameter controls how quickly weights decrease with distance, while the nugget parameter prevents weights from becoming excessively large for very close points, as illustrated in Fig. 6.

Fig. 6 Exponential function defining the weights based on distance.¶

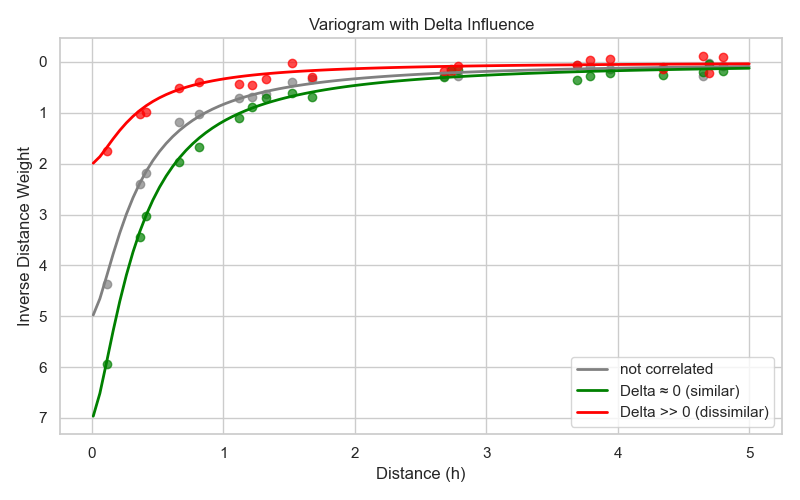
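The weighting formula above can be evaluated directly. The sketch below uses scalar nugget and range values for simplicity. In the application both are trainable, and `distance_weight` is a hypothetical name.

```python
def distance_weight(distance, nugget, range_exponent):
    """weights = 1 / (nugget + distance ** range), as in the formula above."""
    return 1.0 / (nugget + distance ** range_exponent)


# Without a nugget, a neighbor at distance 2 with range 2 gets weight 1/4.
far_weight = distance_weight(2.0, nugget=0.0, range_exponent=2.0)

# The nugget caps the weight of a coincident point at 1 / nugget.
coincident_weight = distance_weight(0.0, nugget=0.1, range_exponent=2.0)
```

A larger range value makes the weight fall off faster for distances greater than 1, and a larger nugget flattens the peak near zero distance.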

Modify the weights using a trainable neural network (one layer only) that incorporates secondary variables. The difference between the predicted and actual secondary variable values (\(\delta_{\text{secondary variable}}\)) is used as input to the neural network:

\[\text{weights} = \text{weights} \times \textit{NeuralNetwork}(\delta_{\text{secondary variable}})\]

The neural network determines a factor that modifies the weights. As illustrated in Fig. 7, if a secondary variable is positively correlated with the primary variable, the neural network increases the weights for points with similar secondary variable values. If the secondary variable is negatively correlated, it decreases the weights. This enables the model to adaptively adjust the influence of surrounding points based on additional information. If the secondary variables are not correlated with the primary variable, the neural network will learn to output a factor of 1, effectively leaving the weights unchanged. If no secondary variables are provided, this step is skipped.

Fig. 7 Modification of weights based on secondary variables difference.¶

For example, Fig. 8 shows an interpolation (A) conducted with no secondary data, in which a clear NW-SE anisotropy is visible. For comparison, the same interpolation (B) is performed with a secondary variable (C) that is correlated with the primary variable. In this scenario, the model learns to increase the weights of points with similar secondary variable values, resulting in an interpolation deformed to align with the anomalies of the secondary variable.

Fig. 8 Example of the influence of secondary variables on the interpolation; (A) interpolation without secondary variable, (B) interpolation with secondary variable, (C) the “interpreted basement domains anomalous apparent susceptibility” used as secondary variable.¶
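A one-layer network that produces such a factor might look like the sketch below. The source does not specify the layer's inputs or activation, so two choices here are assumptions made for illustration: the absolute secondary-variable differences are used as inputs, and an exponential activation keeps the factor positive. The name `secondary_factor` is hypothetical.

```python
import math


def secondary_factor(delta_secondary, layer_weights, bias=0.0):
    """One linear layer followed by exp (activation chosen for illustration)."""
    # Assumption: the layer sees the magnitude of each secondary difference.
    z = bias + sum(w * abs(d) for w, d in zip(layer_weights, delta_secondary))
    return math.exp(z)


# With zero layer weights the factor is 1 and the distance weights are unchanged.
neutral = secondary_factor([0.5, 2.0], [0.0, 0.0])

# With a negative layer weight, neighbors whose secondary value differs more
# from the target receive a smaller factor than similar neighbors.
similar = secondary_factor([0.5], [-1.0])
dissimilar = secondary_factor([2.0], [-1.0])

# The factor multiplies the distance-based weight of the neighbor.
adjusted_weight = 0.25 * similar
```

Under these assumptions, an uncorrelated secondary variable corresponds to layer weights near zero, which matches the behavior described above where the factor stays at 1.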

Compute the final predicted value using a weighted sum:

\[\hat{V} = \frac{\sum (\text{weights} \times \text{values})}{\sum (\text{weights})}\]

The final prediction uses the values of surrounding points, weighted by the learned weights based on distance and secondary variables, to estimate the value at the target location. During training, the value at the target location is known, and the model is trained to minimize the difference between the predicted value and the actual value at that location, as shown in Fig. 9. Training is performed on a subset of the data (Percentage of points per epoch option), and the model is optimized to generalize well to unseen locations.

Fig. 9 Training the model to minimize the error between predicted and actual values.¶
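The weighted sum and the training objective can be sketched together. This simplified version makes several assumptions for illustration: the transformation matrix is the identity, no secondary variables are used, each known point is predicted from the other points only, and the error is a mean squared error. The function names are hypothetical.

```python
import math


def predict(target, neighbors, values, nugget, range_exponent):
    """Weighted average of neighbor values using distance-based weights."""
    weights = [
        1.0 / (nugget + math.dist(target, neighbor) ** range_exponent)
        for neighbor in neighbors
    ]
    weighted_sum = sum(w * v for w, v in zip(weights, values))
    return weighted_sum / sum(weights)


def leave_one_out_loss(points, values, nugget, range_exponent):
    """Mean squared error when each known point is predicted from the others."""
    squared_errors = []
    for i, (point, actual) in enumerate(zip(points, values)):
        other_points = points[:i] + points[i + 1:]
        other_values = values[:i] + values[i + 1:]
        predicted = predict(point, other_points, other_values, nugget, range_exponent)
        squared_errors.append((predicted - actual) ** 2)
    return sum(squared_errors) / len(squared_errors)


# Hypothetical data: three collinear points with known values.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
values = [0.0, 1.0, 2.0]
loss = leave_one_out_loss(points, values, nugget=0.0, range_exponent=2.0)
```

Training then amounts to adjusting the trainable parameters (here only the nugget and range) so that this kind of error decreases. Because the prediction is a normalized weighted average, it always lies between the smallest and largest neighbor values.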

Pre-processing¶

The following pre-processing steps are performed by the function before applying the interpolation:

- Filtering Points:

Only points containing valid values are kept for interpolation.

In the case of secondary variables, all points containing “no-data” values will be excluded.

- Secondary Variable Normalization:

Secondary variables are normalized by subtracting their mean and dividing by their standard deviation.

- Normalization of the Primary Data:

The data to be interpolated is normalized by subtracting its mean and dividing by its standard deviation.

After interpolation, the results are transformed back to the original scale by multiplying by the standard deviation and adding the mean.

- Distance-Based Filtering (optional):

If activated, destination points farther than the specified distance from the source points will not be interpolated.
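The pre-processing steps above can be sketched with the standard library. This is an illustration under stated assumptions rather than the application's code: no-data values are represented as `None` or NaN, the population standard deviation is used, the data are not constant, and all function names are hypothetical. Since normalization divides by the standard deviation, the inverse step multiplies by the standard deviation.

```python
import math
import statistics


def is_valid(value):
    return value is not None and not math.isnan(value)


def filter_rows(rows):
    """Keep only rows where the primary and all secondary values are valid."""
    return [row for row in rows if all(is_valid(v) for v in row)]


def normalize(values):
    """Subtract the mean and divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values], mean, std


def denormalize(normalized, mean, std):
    """Inverse of normalize: multiply by the standard deviation, add the mean."""
    return [v * std + mean for v in normalized]


def within_threshold(destinations, sources, threshold):
    """Keep destination points that have a source point within the threshold."""
    return [
        d for d in destinations
        if min(math.dist(d, s) for s in sources) <= threshold
    ]


rows = filter_rows([(1.0, 2.0), (float("nan"), 3.0), (4.0, None)])
scaled, mean, std = normalize([2.0, 4.0, 6.0])
restored = denormalize(scaled, mean, std)
kept = within_threshold([(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], 2.0)
```

The round trip through `normalize` and `denormalize` returns the original values, which is a quick way to check that the forward and inverse scalings agree.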

Tutorial¶

The following animated image presents a tutorial on how to use the Neural Kriging Interpolation application.

Select the object containing the data to interpolate.

Select the data to be interpolated.

Select the object to project the interpolated data onto.

(Optional) Define the name of the output object.

(Optional) Select the secondary data for the source object.

(Optional) Select the secondary data for the client object.

(Optional) Define a distance threshold to limit the interpolation range.

Run the application.

Inspect the results.